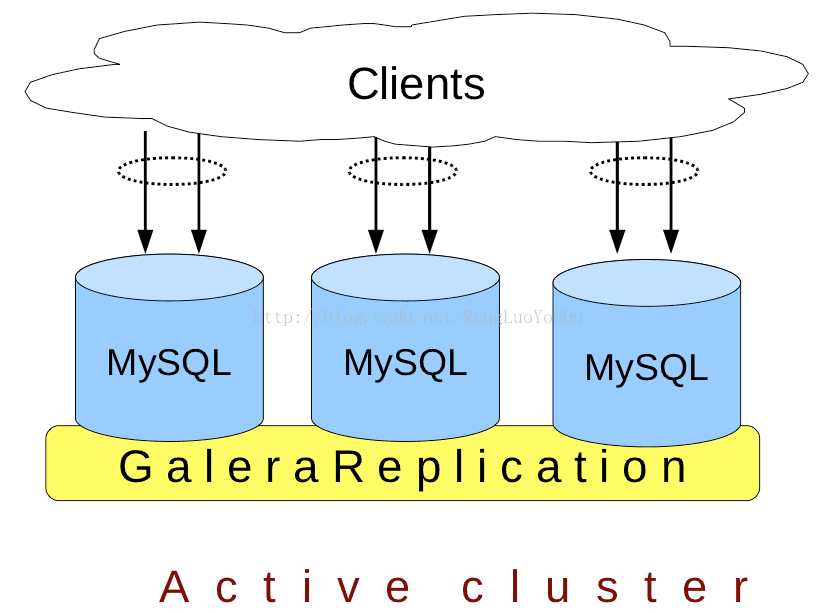

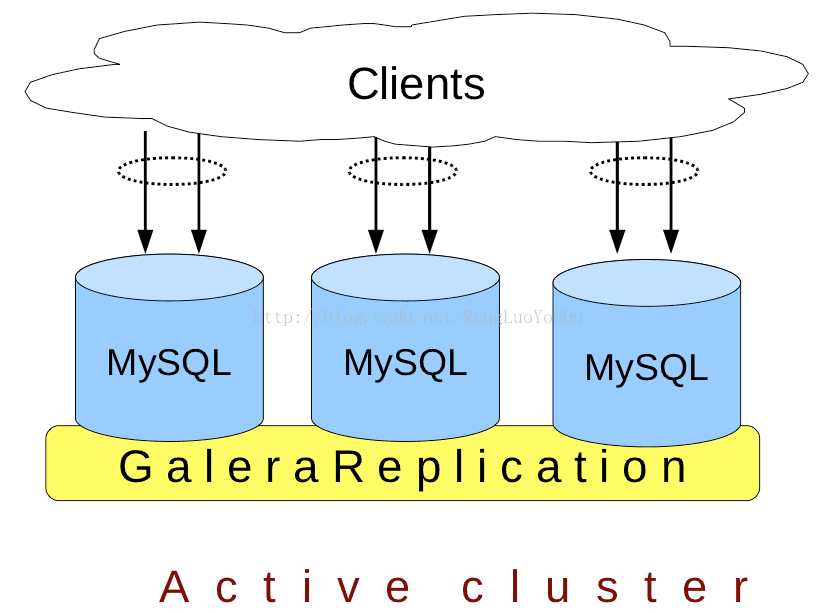

Galera cluster是一个多主同步数据库集群,基于同步复制技术和 Oracle 的 MYSQL/InnoDB。使用Galera Cluster时,您可以直接任意节点读取和写入。并且在丢失任何单个节点时可以不中断操作且无需处理复杂故障转移过程。

下面介绍如何为openstack环境配置mariadb galera高可用集群。

系统环境

主机配置

配置三个节点,使用openstack的控制节点

192.168.100.161 controller01

192.168.100.162 controller02

192.168.100.163 controller03

Haproxy配置

haproxy 添加以下配置

1

2

3

4

5

6

7

8

9

10

11

12

13

|

frontend vip-db

bind 192.168.100.160:3306

timeout client 90m

default_backend db-galera

backend db-galera

option httpchk

option tcpka

stick-table type ip size 1000

stick on dst

timeout server 90m

server controller01 192.168.100.161:3306 check inter 1s port 9200 backup on-marked-down shutdown-sessions

server controller02 192.168.100.162:3306 check inter 1s port 9200 backup on-marked-down shutdown-sessions

server controller03 192.168.100.163:3306 check inter 1s port 9200 backup on-marked-down shutdown-sessions

|

其中 192.168.100.160 是 vip 地址

数据库集群的安装

安装和配置组件(所有节点)

- 安装软件包

1

|

# yum install -y mariadb mariadb-galera-server mariadb-galera-common galera rsync xinetd

|

- 创建 /etc/my.cnf.d/openstack.cnf 文件,加入以下内容

1

2

3

4

5

6

7

8

9

10

|

# vim /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = 192.168.100.161 #本机管理ip

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

|

- 修改 mariadb 最大连接数

- 修改 /usr/lib/systemd/system/mariadb.service 文件加入以下内容

1

2

3

4

5

|

# vim /usr/lib/systemd/system/mariadb.service

[Service]

LimitNOFILE=10000

LimitNPROC=10000

|

1

|

# systemctl --system daemon-reload

|

配置galera cluster(所有节点)

编辑 /etc/my.cnf.d/galera.cnf 文件,修改如下内容

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

|

# cat > /etc/my.cnf.d/galera.cnf << EOF

[mysqld]

skip-name-resolve=1

binlog_format=ROW

default-storage-engine=innodb

innodb_autoinc_lock_mode=2

bind-address=192.168.100.161

wsrep_on=1

wsrep_provider=/usr/lib64/galera/libgalera_smm.so

wsrep_provider_options="pc.recovery=TRUE;gcache.size=300M"

wsrep_cluster_name="openstack_cluster"

wsrep_cluster_address="gcomm://controller01,controller02,controller03"

wsrep_node_name="controller01" #主机名

wsrep_node_address="192.168.100.161" #ip地址

wsrep_slave_threads=1

wsrep_certify_nonPK=1

wsrep_max_ws_rows=131072

wsrep_max_ws_size=1073741824

wsrep_debug=0

wsrep_convert_LOCK_to_trx=0

wsrep_retry_autocommit=1

wsrep_auto_increment_control=1

wsrep_drupal_282555_workaround=0

wsrep_causal_reads=0

wsrep_notify_cmd=

wsrep_sst_method=rsync

wsrep_sst_auth=root:

EOF

|

配置 haproxy 健康检查(所有节点)

- 安装 xinted 服务

1

|

# yum install xinetd -y

|

- 登录数据库,创建 clustercheck 用户,并设置其本地访问数据库的权限

1

2

3

|

# systemctl start mariadb.service

# mysql -e "CREATE USER 'clustercheck'@'localhost' IDENTIFIED BY 'root1234';"

# systemctl stop mariadb.service

|

- 为 clustercheck 用户创建配置文件 /etc/sysconfig/clustercheck

1

2

3

4

5

6

|

# cat > /etc/sysconfig/clustercheck << EOF

MYSQL_USERNAME="clustercheck"

MYSQL_PASSWORD="root1234"

MYSQL_HOST="localhost"

MYSQL_PORT="3306"

EOF

|

- 创建个配置 HAProxy 监控服务 /etc/xinetd.d/galera-monitor

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

|

# cat > /etc/xinetd.d/galera-monitor << EOF

service galera-monitor

{

port = 9200

disable = no

socket_type = stream

protocol = tcp

wait = no

user = root

group = root

groups = yes

server = /usr/bin/clustercheck

type = UNLISTED

per_source = UNLIMITED

log_on_success =

log_on_failure = HOST

flags = REUSE

}

EOF

|

- 启动 xineted 并设置开机自启

1

2

3

|

# systemctl daemon-reload

# systemctl enable xinetd

# systemctl start xinetd

|

完成安装

创建数据库集群

1

|

# pcs resource create galera-cluster ocf:heartbeat:galera enable_creation=true wsrep_cluster_address="gcomm://controller01,controller02,controller03" additional_parameters='--open-files-limit=16384' enable_creation=true meta master-max=3 ordered=true op promote timeout=300s on-fail=block --master

|

设置资源依赖

1

|

# pcs constraint order start haproxy-clone then galera-cluster-master

|

验证

查看 pacemaker 资源

1

2

3

4

|

# pcs resource

……

Master/Slave Set: galera-cluster-master [galera-cluster]

Masters: [ controller01 controller02 controller03 ]

|

查看集群状态

1

2

3

4

5

6

7

8

9

|

MariaDB [(none)]> SHOW STATUS like 'wsrep_cluster_%';

+--------------------------+--------------------------------------+

| Variable_name | Value |

+--------------------------+--------------------------------------+

| wsrep_cluster_conf_id | 3 |

| wsrep_cluster_size | 3 |

| wsrep_cluster_state_uuid | 83d58f96-e6e3-11e8-82ab-87f7bf579cc6 |

| wsrep_cluster_status | Primary |

+--------------------------+--------------------------------------+

|

wsrep_cluster_size 为 3 集群成功创建。